Understanding the Confused Deputy Problem

The ‘confused deputy problem’ is a classic illustration of privilege escalation in cybersecurity. It describes a scenario in which a program, which we’ll call the ‘deputy,’ holds permissions that a malicious actor does not have. The attacker tricks the deputy into using those permissions on the attacker’s behalf. The program was designed to perform legitimate functions, but it becomes “confused” about whose authority it is acting under and carries out unauthorized actions.

The Relevance in Large Language Models

Large Language Models (LLMs) can act as confused deputies in the same way. When granted excessive privileges, an LLM could unwittingly be steered into executing commands or making access control decisions that benefit an attacker. This risk highlights the importance of carefully managing the privileges assigned to LLMs so that they operate within a secure and controlled environment.
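One way to picture this is a dispatcher that checks the end user’s permissions, rather than the application’s own, before it runs any tool the model asks for. The following is a minimal sketch under stated assumptions: the tool names, the permission strings, and the `User` and `dispatch_tool_call` names are all hypothetical and do not come from any particular framework.

```python
from dataclasses import dataclass

# Hypothetical registry: each tool names the permission a caller must hold.
TOOL_PERMISSIONS = {
    "read_report": "reports:read",
    "delete_report": "reports:delete",
}


@dataclass(frozen=True)
class User:
    name: str
    permissions: frozenset


def dispatch_tool_call(user: User, tool_name: str) -> str:
    """Run a model-requested tool only if the end user is allowed to use it."""
    required = TOOL_PERMISSIONS.get(tool_name)
    if required is None:
        # Anything not explicitly registered is refused.
        raise PermissionError(f"unknown tool: {tool_name!r}")
    if required not in user.permissions:
        # Authorize against the user's rights, not the application's.
        raise PermissionError(f"{user.name} lacks {required!r}")
    # Placeholder for the real tool implementation.
    return f"{tool_name} executed for {user.name}"
```

In this sketch, a prompt that persuades the model to request `delete_report` still fails for a user who only holds `reports:read`, because the decision never depends on what the model asked for alone.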

The Pitfalls of Insecure Output Handling

Having explored the confused deputy problem, we now examine Insecure Output Handling in Large Language Models (LLMs). In the third installment of our series on the OWASP Top 10 for LLM applications, we address the need to safeguard against the misuse of LLM outputs. Because the content an LLM generates is driven by user prompts, passing that content to other components gives users a form of indirect access to the underlying functions. If these outputs are not properly validated and sanitized, they can open the door to browser-side attacks such as XSS and CSRF, or to more critical attacks on backend systems, such as remote code execution and privilege escalation.

Analyzing Security Risks with Real-world Examples

- Passing LLM-generated output directly into system commands or functions like ‘exec’ or ‘eval’ can lead to remote code execution. To elaborate, imagine a situation where an LLM is used as a coding assistant. An unsuspecting user might request a snippet of code to perform a task. If the LLM’s response includes system-level commands and the user runs it unreviewed in their command line or script, it could trigger actions that compromise their system.

- The LLM may generate JavaScript or Markdown that, when sent back to the user, is interpreted by the browser, potentially causing Cross-Site Scripting (XSS) attacks. Consider a scenario where a user asks the LLM to help with web development tasks. The LLM obliges by crafting a piece of JavaScript or Markdown. If this code contains malicious scripts and the user incorporates it into their web application, it could be executed by other users’ browsers, leading to a breach of security. The consequences can include stolen cookies and session tokens, or even defaced websites.

- Third-party plugins that do not validate their inputs leave the door open for security issues. For example, consider a plugin that formats user comments on a forum. If an LLM provides a response containing executable code and the plugin doesn’t sanitize it, the code could be published as-is. Once on the forum, it might execute when other users view the page, perhaps stealing information or corrupting data. Such plugins must rigorously sanitize their inputs, including LLM output, to prevent security breaches.
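The first scenario above can be made concrete with a short sketch. It assumes an application that expects either a plain data literal or a simple command from the model. The helper names and the `ALLOWED_COMMANDS` allowlist are hypothetical, and the allowlist is illustrative rather than a recommendation.

```python
import ast
import shlex
from typing import List

# Hypothetical allowlist of programs the application is willing to run.
ALLOWED_COMMANDS = {"ls", "echo"}


def parse_model_literal(text: str):
    """Parse a Python literal from model output without executing any code.

    Unlike eval(), ast.literal_eval() accepts only literals such as
    numbers, strings, lists, and dicts, and rejects function calls.
    """
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError) as exc:
        raise ValueError("model output is not a plain literal") from exc


def build_safe_argv(model_command: str) -> List[str]:
    """Split model output into argv and reject programs outside the allowlist."""
    argv = shlex.split(model_command)
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        raise ValueError(f"command not allowed: {model_command!r}")
    # Pass argv to subprocess.run(argv) WITHOUT shell=True, so characters
    # such as ';' or '|' are treated as plain arguments, not shell syntax.
    return argv
```

An allowlist of program names is only a first filter. A real deployment would also need to constrain arguments and run the command with the least privilege it can.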

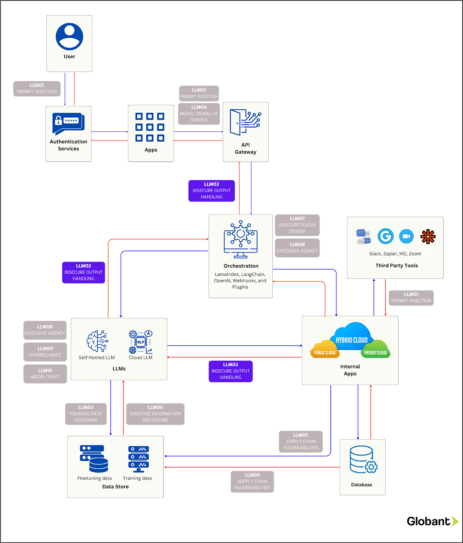

The diagram above shows the pathways through which an attacker might exploit this vulnerability in an enterprise application. Pay attention to “LLM02: Insecure Output Handling” highlighted within the diagram.

Reference: Original diagram.

Proactive Measures and Best Practices

- Approach every interaction with the model with skepticism, employing a zero-trust policy that assumes no user, system, or process – including the model – is inherently trustworthy. Validate every output from the model before it reaches any system function, so that security risks are detected and neutralized first.

- The OWASP Application Security Verification Standard (ASVS) defines verifiable security requirements for applications, including requirements for input validation, sanitization, and output encoding. Follow these guidelines when encoding model output before it is returned to users, which is crucial in guarding against the execution of unintended JavaScript or Markdown by their browsers. Encoding output for the context in which it will be displayed keeps the data returned to users both functional and safe.
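As a minimal sketch of output encoding, the example below uses `html.escape` from the Python standard library to encode model output before placing it in an HTML page. The function names and the surrounding `<div>` markup are hypothetical, and the sketch covers only the HTML body context. Other contexts, such as URLs, JavaScript, or rendered Markdown, need their own encoding or sanitization.

```python
import html


def encode_for_html(model_output: str) -> str:
    """Encode model output so the browser displays it as text, not markup."""
    # quote=True also escapes double and single quotes, which matters if
    # the value is ever placed inside an HTML attribute.
    return html.escape(model_output, quote=True)


def render_reply(model_output: str) -> str:
    """Wrap the encoded output in a (hypothetical) reply container."""
    return f'<div class="reply">{encode_for_html(model_output)}</div>'
```

With this in place, a response containing `<script>` tags reaches the browser as inert text instead of executable markup.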

A Summary and Road Ahead

As we’ve explored the ‘confused deputy problem’ and insecure output handling in LLMs, we’ve highlighted the necessity of controlled permissions and diligent output management to prevent unauthorized actions. With practical instances, we illustrated how LLMs could be weaponized if not properly safeguarded.

Having addressed two OWASP vulnerabilities in this series on LLM application security, we will continue the discussion in upcoming articles, unpacking the remaining vulnerabilities and the defenses against them. Keep an eye out for the next installment in our series on cybersecurity concerns in LLM applications.