Introduction: The importance of having access to real-time data

Increasingly organizations need to have access to real-time, or near real-time, data. However, in many cases there are technological challenges in achieving this, such as issues with data quality and the prevalence of legacy platforms. In many technology environments, data is sent to a data warehouse in a batch process that is executed hourly, daily, or executed in some other periodic fashion. This approach is often accepted because most reports don’t depend on recent or real-time data to provide useful information. But this isn’t the case in all scenarios.

For example, here at Globant we worked with an organization in the educational sector. They faced a scenario where students who used their tools were answering quizzes based on single option questions. As soon as they finished the quiz, the teacher needed to see their performance. It wasn’t useful for the teacher to wait until the batch process had finished at some later time to see the students’ results.

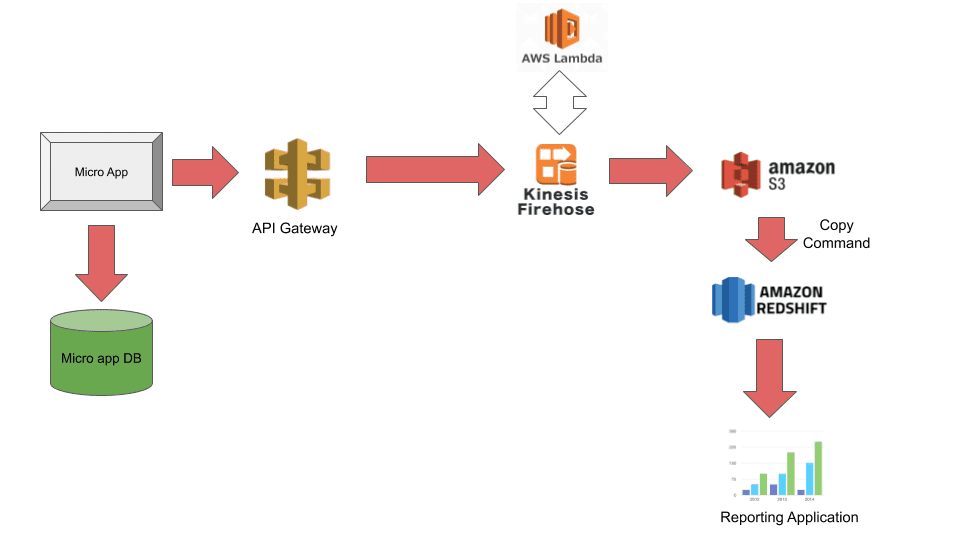

To help our client obtain real-time data, we set about complementing their data warehouse tool, as part of their AWS infrastructure, with other technologies from Amazon, to define an architecture that enabled them to make data available almost immediately. This meant they did not have to have a scheduled process or a job that had to check if there’s new data to send to the data warehouse. So in this article, we’ll explain how we created this architecture, using the example of the educational organization and student quizzes. It’s an approach that requires very little coding.

The technologies involved in the architecture

Let’s first examine the technologies involved:

- API Gateway. According to the online documentation, “Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure APIs at any scale. APIs act as the “front door” for applications to access data, business logic, or functionality from your backend services.” API Gateway allows you to easily connect the API to different AWS services such as Kinesis Firehose, Lambda Functions.

- Kinesis Firehose. According to its documentation, “Amazon Kinesis Data Firehose is the easiest way to reliably load streaming data into data lakes, data stores, and analytics tools”. Kinesis Firehose makes ingestion of streaming data into storage systems such as Amazon S3, AWS Redshift, and Amazon Elasticsearch easy.

- AWS Lambda. Based on the AWS official documentation, “AWS Lambda lets you run code without provisioning or managing servers … You can set up your code to automatically trigger from other AWS services or call it directly from any web or mobile app”. Basically, you can upload your own code to AWS infrastructure and you don’t have to worry about setting up the servers to execute this code.

- Amazon S3. The documentation says: “Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance”. S3 is used to store any kind of file.

- Amazon Redshift. According to the Amazon Redshift Cluster Management Guide: “Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud”. The data warehouse is the place used to do reporting and analytics.

- Kinesis Data Streams. Based on the official documentation: “Amazon Kinesis Data Streams is a massively scalable and durable real-time data streaming service. KDS can continuously capture gigabytes of data per second from hundreds of thousands of sources”. KDS is used to transport data in real-time and can be configured to transport large amounts of information.

The quiz micro-app first saves the transactional data in its database for application usage. Then, the application sends the data to a REST endpoint using API Gateway that contains a proxy to send the information to Kinesis Firehose. We recognized it was possible for the volume of data generated by the micro application to increase over time, and that’s why the usage of API Gateway made sense because the API configuration can be modified to make the switch between calling to Kinesis Firehose or Kinesis Data Streams.

Kinesis Firehose allows producers to send streaming data to destinations such as S3, Elasticsearch, and Redshift. Firehose has a limit of 5,000 records/second and 5 MB/second. If the application’s volume is higher than Firehose’s limit, the API integration can be changed to call Kinesis Data Streams instead of calling Firehose directly. If the API is configured to integrate with Kinesis Data Streams, this technology has to call Kinesis Firehose anyway to save the data in its final destination.

Any configuration made to the API Gateway is transparent to the quiz micro-app since the application only cares to call the endpoint and get a successful response.

In our example, at the early stage of the project, the volume of data was not very high, so using Kinesis Firehose was a good option. We didn’t expect that the volume of data would increase quickly.

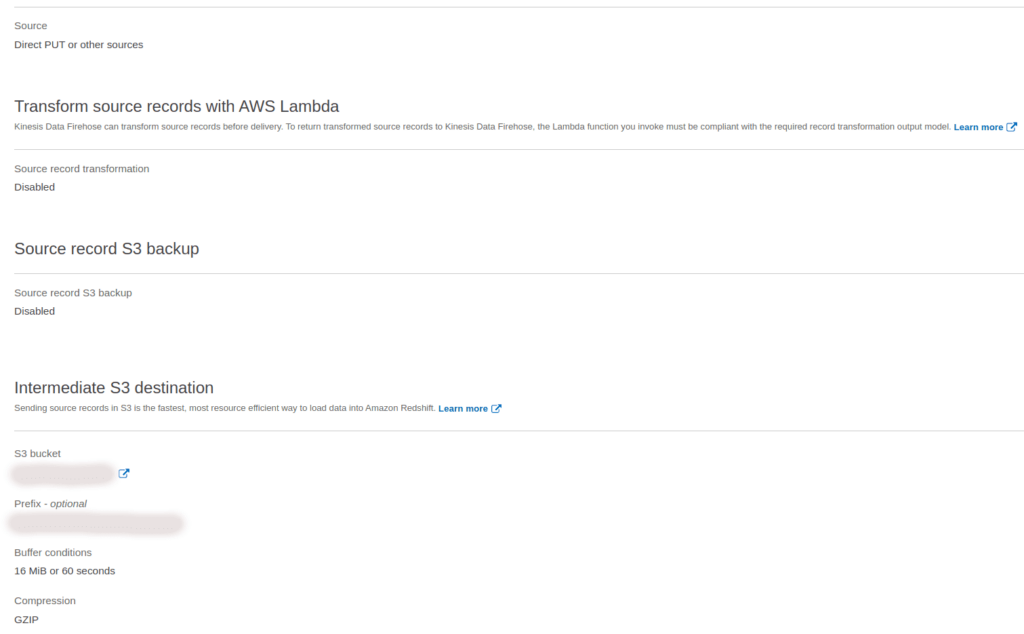

If you need to transform the data records, Kinesis Firehose can do that by using Lambda functions. Once the Lambda function executes its logic, transformed data is sent back to Firehose. In our project example, we didn’t need to use AWS Lambda to make transformations since the data from the micro app was saved as-it-is in Redshift’s destination table. However, the usage of Lambda functions helps to enrich and transform data in case it’s not possible to do so in the data source.

Configuration for Kinesis Firehose in the AWS web console

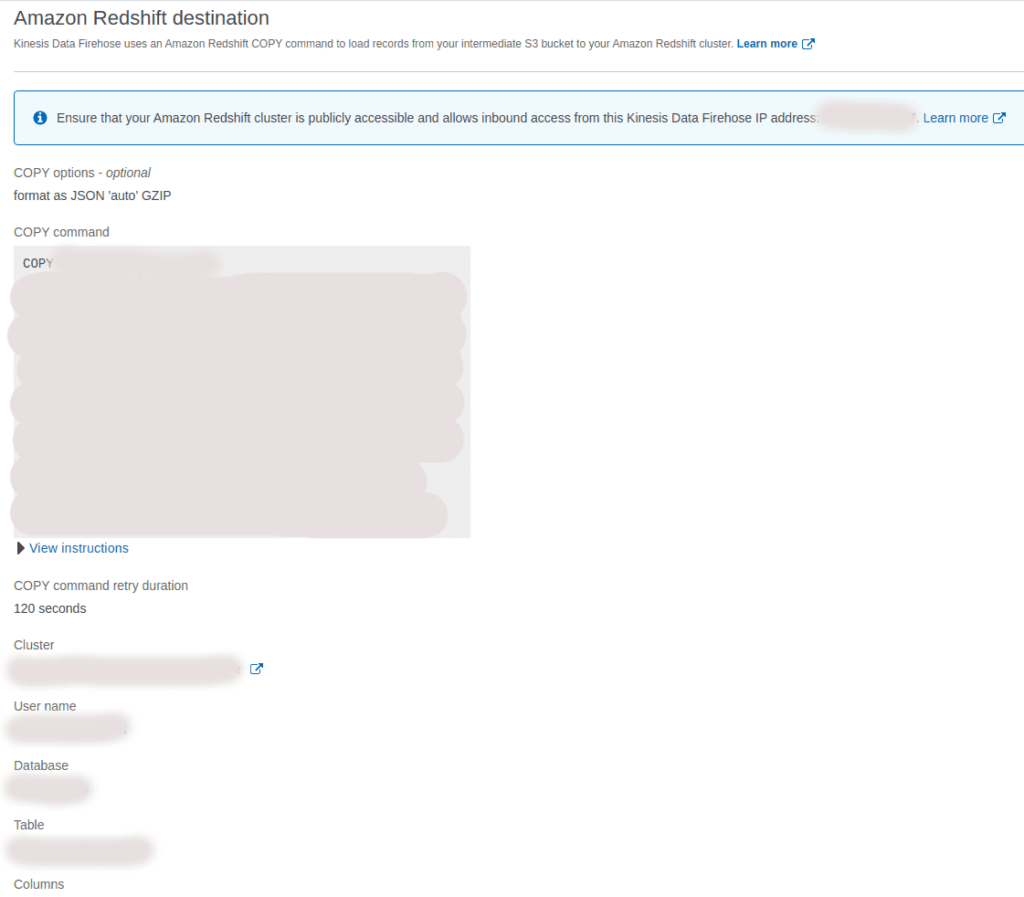

Firehose saves the data into a file that is located in an intermediate S3 bucket. The reason for the intermediate bucket is to use the COPY command from Redshift. It performs better using COPY command rather than doing several INSERT commands.

One configuration item defines when the data must be sent to Redshift. The data will be saved either when the buffer size reaches a certain size (1-128 MiB) or the buffer interval reaches a certain time (60-900 seconds).

Other configuration items that are related to Redshift are the cluster name, user name, destination database, and table. You can define which table’s columns are going to receive the information. As mentioned previously, data is saved in Redshift via the COPY command and you can define the format of the file. In our case, the format of the payload is JSON and the file stored in S3 is compressed. As long as you define COPY options and columns, the COPY command is generated automatically, so you don’t have to worry about writing the command from scratch.

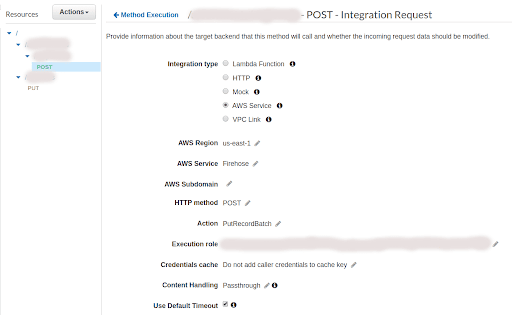

Configuration for API Gateway in the AWS web console

Once you have created the path, method, and API URL, the integration of the API with Firehose has to be done in the section Integration Request as shown in the following image.

Since the micro-app needs to deliver several records in the same request, the Action item for Firehose is PutRecordBatch. In case the payload only has one record, the value is PutRecord.

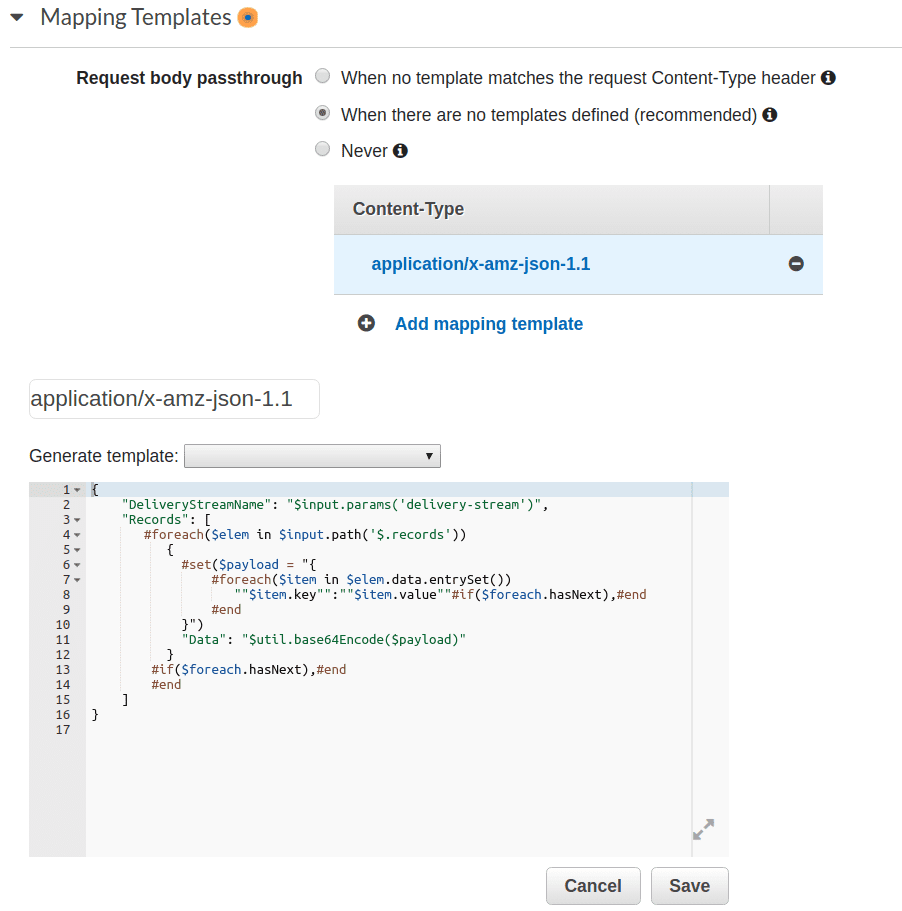

The next step is to create the request to send the payload to Firehose. On the same page, subsection Mapping Templates, the header “Content-Type” whose value is application/x-amz-json-1.0 is configured to take the micro-app payload, iterate over the records, and create the request.

The API should receive a parameter called delivery-stream which is the name of the Firehose stream. It’s mandatory to make the request to Firehose.

The template which is written in Velocity iterates over the records and encodes them in base64.

You can find the documentation for PutRecordBatch request in this link.

Step to read the data from Redshift

Finally, a reporting application such as AWS QuickSight, Tableau, or Power BI can query the results using the connectors that are available for Redshift.

Results based on executions

Based on the pipeline’s previous executions, the data takes around 70 seconds to be saved in Redshift when it’s sent from the micro application. For higher volumes (2,000 records per second), the data is saved after 110 seconds on average. Redshift can receive the file’s content in parallel with the COPY command so volume is not a problem to store large amounts to the data warehouse.

Conclusions and considerations

We successfully implemented this architecture with our client in the educational sector. The organization was then able to provide their users with reports containing real-time data. This made a significant difference, enabling teachers to provide better feedback to their students.

Based on our experience, I want to share some of our key learnings:

- The coding effort is very low to implement this architecture. On one side the micro app needs to send the data to the endpoint. On the other side, a Lambda function could be used to transform the data. But if no transformation is needed, the coding effort is even lower.

- You can do all the configuration for this architecture using the AWS web console or AWS Command Line Interface (CLI).

- It’s key to know the data volume in advance based on Firehose limits (5,000 records/second and 5 MB/second) to determine if the API Gateway can call Firehose directly or Kinesis Data Streams has to be used in the middle to support higher volumes. As mentioned above, the project’s initial volume didn’t surpass Firehose limits, so we haven’t yet tested integration with Kinesis Data Streams, but it’s mentioned in the reference section.

- The data sent by the micro-app is mapped to a destination table in Redshift. This means the payload has to fit the table structure. If the micro app can’t have all fields to be inserted into the table, the optional Lambda function triggered by Firehose can be created to add missing information to fit the destination structure.

- Buffer conditions in Firehose are very useful to determine how quickly the data is going to be sent to S3, and is determined by the data’s volume or buffer time. The minimum buffer size is 1 MB. If your application’s volume is equal or greater than 1 MB/sec, you won’t wait one minute to have this data stored. If your application’s volume is less than 1 MB/sec, the buffer time determines when the data is saved. However, although the buffer configuration depends on volume or time, application volume can make the pipeline save data in a shorter amount of time.

References

- Amazon Elasticsearch

- Amazon QuickSight

- Amazon Redshift

- Amazon Redshift Architecture

- Amazon Redshift Management Guide

- Amazon S3

- Apache Velocity

- API Gateway

- AWS Command Line Interface

- AWS Lambda

- Kinesis Data Streams

- Kinesis Firehose

- Power BI

- PutRecordBatch request

- Reference of COPY command in Redshift

- Tableau

- Writing to Kinesis Data Firehose using Kinesis Data Streams