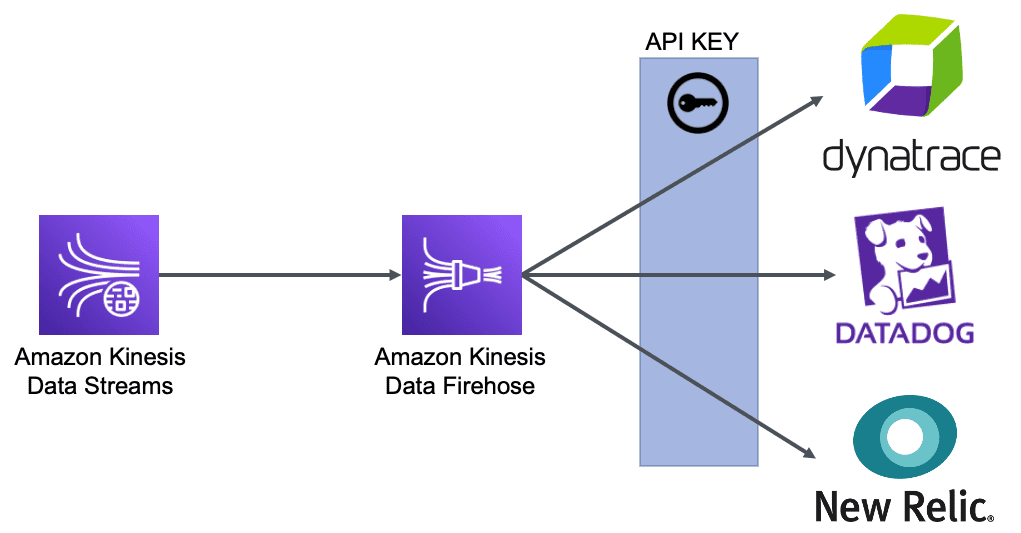

AWS data and analytics services keep evolving. Recently, for example, Amazon Kinesis Data Firehose added support for third-party destinations such as Dynatrace, Datadog, and New Relic, among others. These integrations make it easy to send our log and metric flows to those providers.

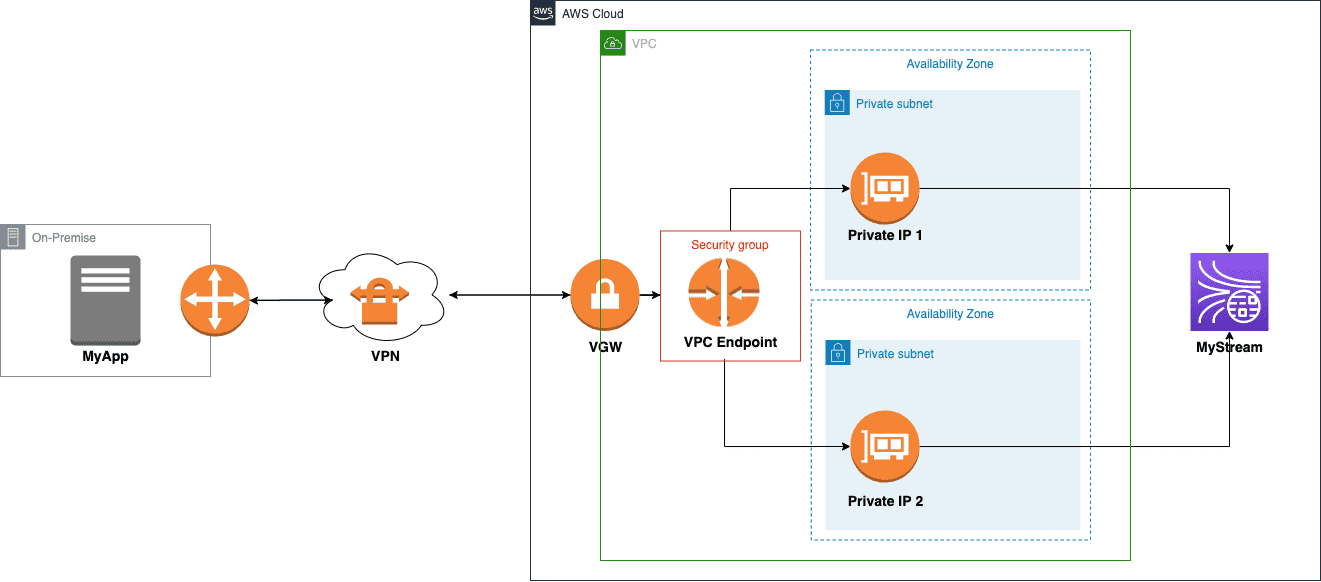

When I saw this release, I started working on an architecture I had previously implemented (you can see the architecture in this link). Since we were ingesting many on-premises logs into AWS through a VPN, I decided to test the new functionality and implement the Datadog integration, to see how the logs could reach Datadog without giving up what was already in place.

I built the Datadog integration to test this new functionality and then take advantage of what Datadog provides:

- Log parsing.

- Log enrichment.

- Generation of metrics.

- Filtering and prioritization.

How does the integration work?

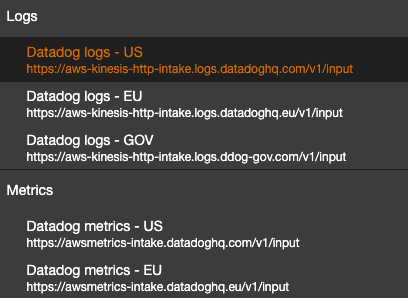

All of these provider integrations work the same way: you select the correct HTTP endpoint for the provider and supply an API KEY generated in that provider's service.
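To make the endpoint-plus-API KEY model more concrete: as I understand AWS's HTTP endpoint delivery specification, Firehose POSTs a JSON body whose `records` array holds base64-encoded data, and it sends the configured API KEY in the `X-Amz-Firehose-Access-Key` header. The sketch below plays the receiving side to show what arrives. `decode_firehose_request` is a hypothetical helper, and the request ID, timestamp, and key are made-up values; Datadog runs its own intake, so this is only an illustration of the protocol.

```python
import base64
import json


def decode_firehose_request(headers: dict, body: str, expected_key: str) -> list:
    """Hypothetical helper: check the access key, then decode each record."""
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {k.lower(): v for k, v in headers.items()}
    # Firehose passes the API KEY configured on the destination in this header.
    if normalized.get("x-amz-firehose-access-key") != expected_key:
        raise PermissionError("invalid access key")
    payload = json.loads(body)
    # Each record's "data" field is base64-encoded bytes.
    return [base64.b64decode(r["data"]).decode("utf-8") for r in payload["records"]]


# Illustrative request; the ID, timestamp and key are made-up sample values.
sample_body = json.dumps({
    "requestId": "ed4acda5-034f-9f42-bba1-f29aea6d7d8f",
    "timestamp": 1578090901599,
    "records": [
        {"data": base64.b64encode(b'{"level": "INFO", "msg": "hello"}').decode()},
        {"data": base64.b64encode(b'{"level": "ERROR", "msg": "boom"}').decode()},
    ],
})
sample_headers = {"X-Amz-Firehose-Access-Key": "example-api-key"}

logs = decode_firehose_request(sample_headers, sample_body, "example-api-key")
print(logs)
```

A request carrying the wrong key is rejected before any record is decoded, which is the role the API KEY plays in every one of these integrations.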

Datadog Integration

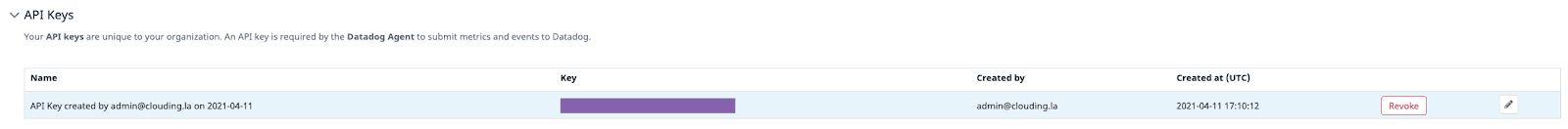

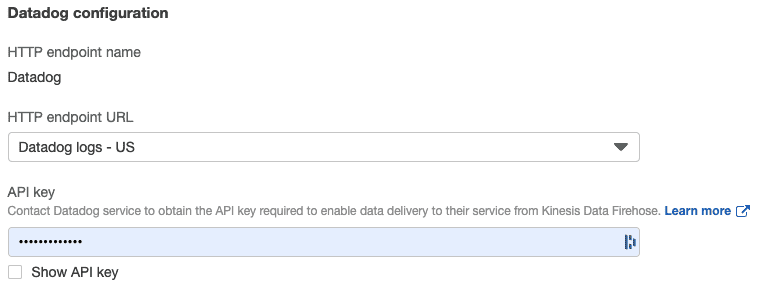

1- Go to the Datadog Configuration page.

2- Create an API KEY.

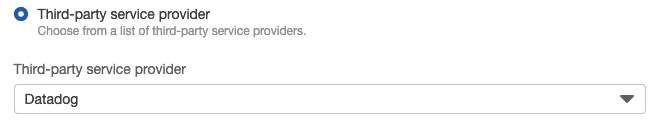

3- Create an Amazon Kinesis Data Firehose delivery stream and select Datadog as the destination.

4- Select the HTTP endpoint URL.

5- Enter the API KEY from step 2.
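For readers who prefer scripting steps 3 to 5 over the console, here is a minimal sketch that builds the request parameters for the Firehose `CreateDeliveryStream` API, with a Kinesis data stream as the source and an HTTP endpoint destination. All names, ARNs, and the bucket are placeholders, and a single IAM role with the required permissions is assumed. The Datadog logs URL is my assumption for the US1 site (check Datadog's documentation for your region), and the buffering and retry values are illustrative, not values from the original setup. The function only builds a dict, so it runs without AWS credentials.

```python
def build_datadog_delivery_stream(
    name: str,
    stream_arn: str,
    role_arn: str,
    backup_bucket_arn: str,
    api_key: str,
    # Assumed Datadog US1 logs intake URL; verify it for your Datadog site.
    endpoint_url: str = "https://aws-kinesis-http-intake.logs.datadoghq.com/v1/input",
) -> dict:
    """Hypothetical helper: CreateDeliveryStream parameters for a Datadog destination."""
    return {
        "DeliveryStreamName": name,
        # Read from the existing Kinesis data stream instead of Direct PUT.
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": stream_arn,
            "RoleARN": role_arn,
        },
        "HttpEndpointDestinationConfiguration": {
            "EndpointConfiguration": {
                "Url": endpoint_url,
                "Name": "Datadog",
                "AccessKey": api_key,  # the API KEY created in step 2
            },
            # Illustrative buffering and retry values; tune them for your volume.
            "BufferingHints": {"SizeInMBs": 4, "IntervalInSeconds": 60},
            "RequestConfiguration": {"ContentEncoding": "GZIP"},
            "RetryOptions": {"DurationInSeconds": 60},
            "RoleARN": role_arn,
            # Records that cannot be delivered are backed up to S3.
            "S3BackupMode": "FailedDataOnly",
            "S3Configuration": {
                "RoleARN": role_arn,
                "BucketARN": backup_bucket_arn,
                "Prefix": "datadog-failed/",
            },
        },
    }


# Placeholder values for illustration only.
params = build_datadog_delivery_stream(
    name="logs-to-datadog",
    stream_arn="arn:aws:kinesis:us-east-1:123456789012:stream/onprem-logs",
    role_arn="arn:aws:iam::123456789012:role/firehose-role",
    backup_bucket_arn="arn:aws:s3:::my-backup-bucket",
    api_key="example-api-key",
)
```

With boto3 installed and credentials configured, the dict could then be passed as `boto3.client("firehose").create_delivery_stream(**params)`.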

Check the integration

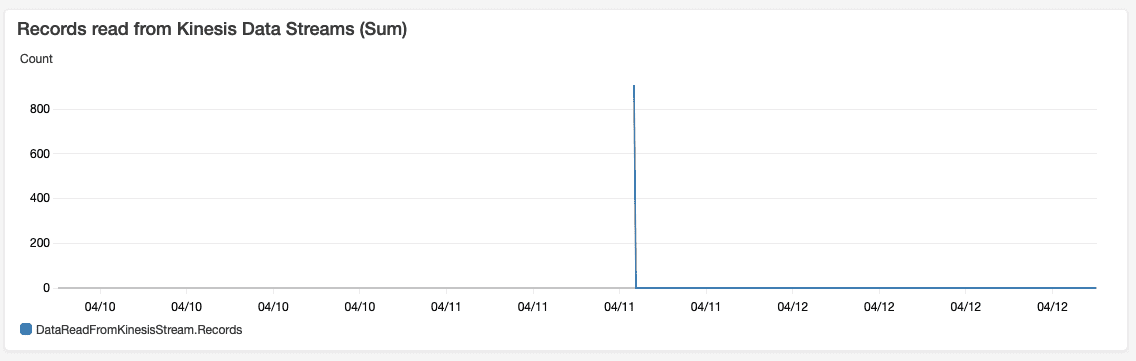

Check the integration in Amazon Kinesis Data Firehose by monitoring the Records read from Kinesis Data Stream (Sum) metric.
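The same check can be scripted against CloudWatch. As far as I know, this console chart corresponds to the `DataReadFromKinesisStream.Records` metric in the `AWS/Firehose` namespace with the `DeliveryStreamName` dimension; treat that mapping as an assumption. The sketch below builds parameters for CloudWatch's `GetMetricStatistics` and sums the returned datapoints. The delivery stream name and the five-minute period are placeholders, and the response is faked so the example runs offline.

```python
from datetime import datetime, timedelta, timezone


def records_read_query(delivery_stream: str, hours: int = 1) -> dict:
    """Hypothetical helper: GetMetricStatistics parameters for records read."""
    end = datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Firehose",
        "MetricName": "DataReadFromKinesisStream.Records",
        "Dimensions": [{"Name": "DeliveryStreamName", "Value": delivery_stream}],
        "StartTime": end - timedelta(hours=hours),
        "EndTime": end,
        "Period": 300,  # 5-minute buckets
        "Statistics": ["Sum"],
    }


def total_records(response: dict) -> float:
    """Add up the Sum statistic across all returned datapoints."""
    return sum(point["Sum"] for point in response.get("Datapoints", []))


# Offline example with a response shaped like GetMetricStatistics output.
fake_response = {"Datapoints": [{"Sum": 120.0}, {"Sum": 80.0}, {"Sum": 0.0}]}
print(total_records(fake_response))  # 200.0
```

Against a real account, the query would be sent with `boto3.client("cloudwatch").get_metric_statistics(**records_read_query("logs-to-datadog"))`; a total that stays at zero suggests the delivery stream is not reading from the data stream.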

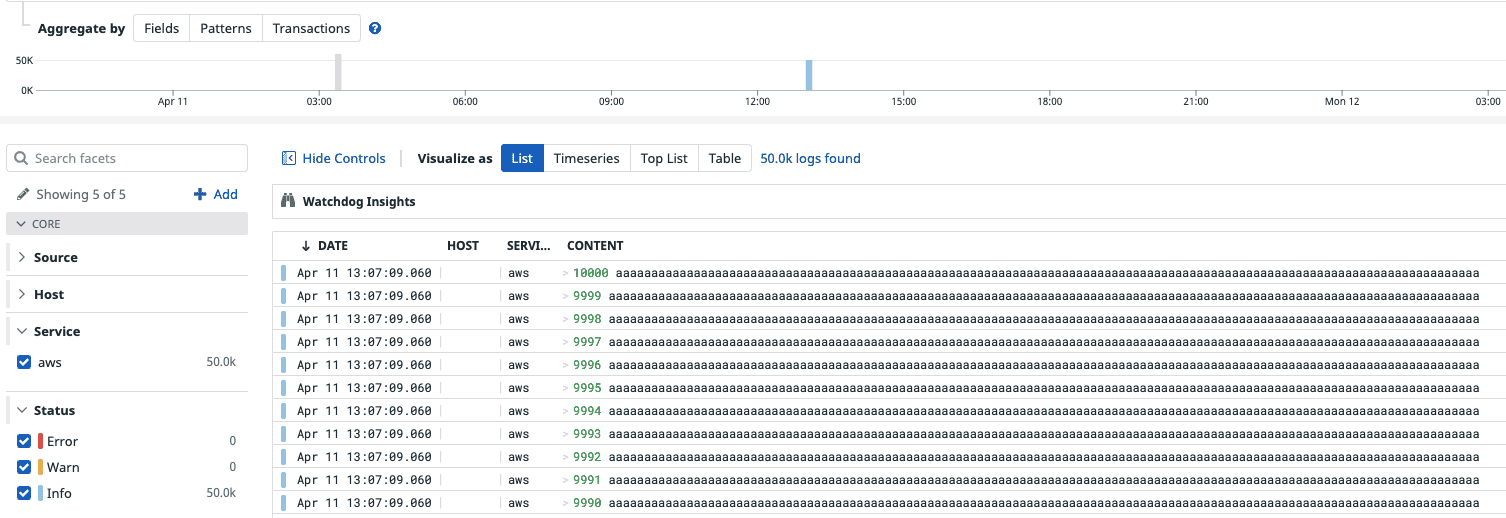

Check the logs in Datadog. The logs in the image were generated by the sample KPL application.

Once you have all the logs in Datadog, you can take advantage of the functionalities it provides.

Final architecture and conclusions

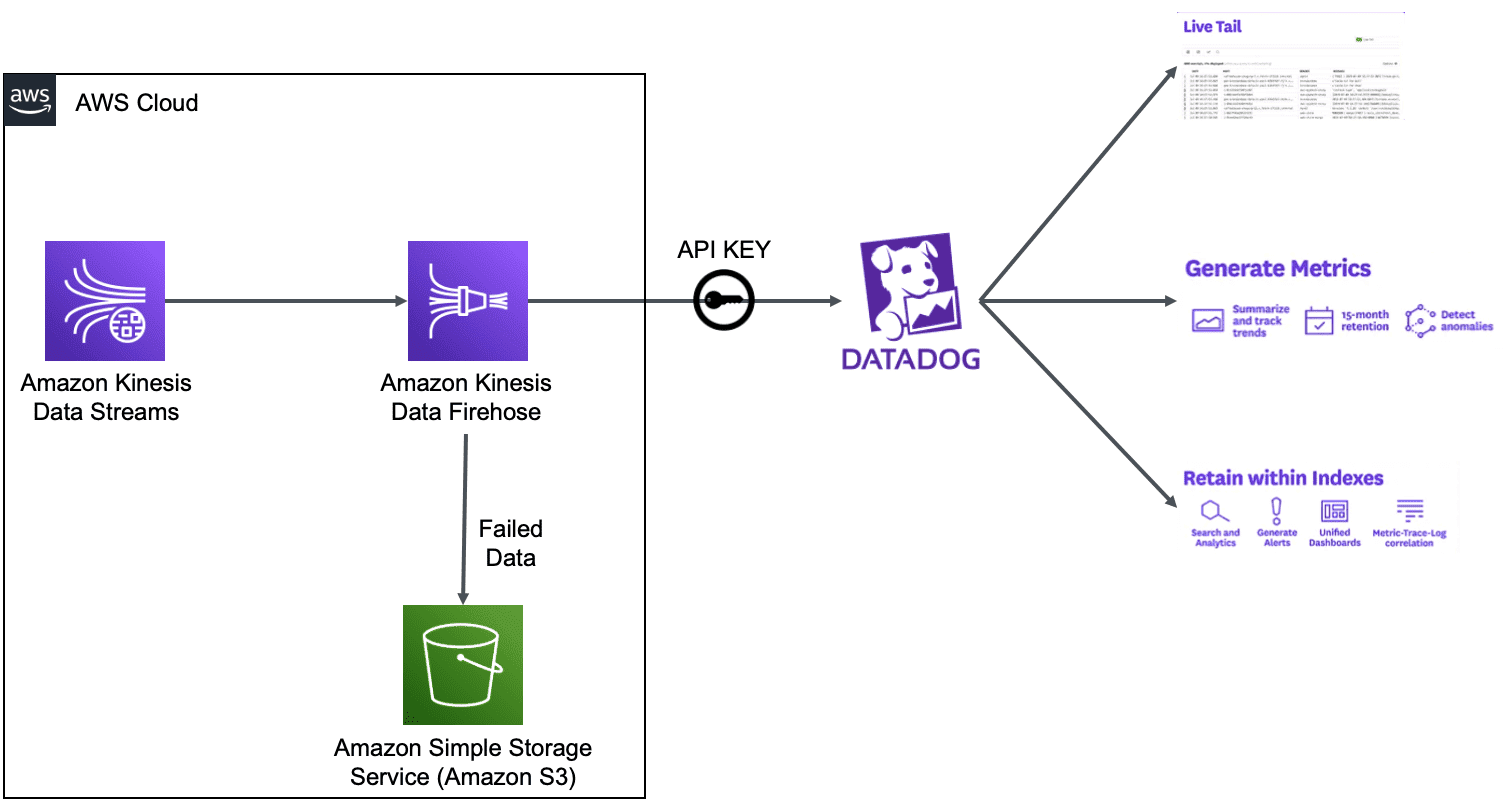

In the architecture above, Amazon Kinesis Data Streams receives data from an on-premises application running the Kinesis Producer Library (KPL). The data travels over the VPN and reaches the Kinesis data stream through a VPC endpoint. The stream then feeds the Amazon Kinesis Data Firehose delivery stream, which delivers the data to Datadog.
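The KPL is a Java library that batches and aggregates records for the producer. As a simplified illustration of the batching part only, the sketch below groups log lines into chunks that respect the documented `PutRecords` limits (at most 500 records and 5 MiB per request, and 1 MiB per record, counting the partition key). It does not implement KPL aggregation, and using one partition key per log source is my assumption, not part of the original setup.

```python
MAX_RECORDS = 500                    # records per PutRecords request
MAX_RECORD_BYTES = 1024 * 1024       # 1 MiB per record
MAX_REQUEST_BYTES = 5 * 1024 * 1024  # 5 MiB per request


def batch_for_put_records(entries):
    """Group (partition_key, data) pairs into PutRecords-sized batches."""
    batches, current, current_bytes = [], [], 0
    for key, data in entries:
        # Both the data blob and the partition key count towards the limits.
        size = len(data) + len(key.encode("utf-8"))
        if size > MAX_RECORD_BYTES:
            raise ValueError(f"record for {key!r} exceeds 1 MiB")
        # Start a new batch when adding this record would break a limit.
        if current and (len(current) == MAX_RECORDS
                        or current_bytes + size > MAX_REQUEST_BYTES):
            batches.append(current)
            current, current_bytes = [], 0
        current.append({"Data": data, "PartitionKey": key})
        current_bytes += size
    if current:
        batches.append(current)
    return batches


# Example: 1,200 small log lines from one hypothetical on-premises source.
lines = [("app-server-01", f"log line {i}".encode("utf-8")) for i in range(1200)]
batches = batch_for_put_records(lines)
print([len(b) for b in batches])  # [500, 500, 200]
```

Each batch could be sent with `boto3.client("kinesis").put_records(StreamName="onprem-logs", Records=batch)`, where the stream name is a placeholder. `PutRecords` is not atomic, so a real producer must also retry the entries counted in `FailedRecordCount`, which is part of what the KPL handles for you.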

Here are some conclusions and highlights:

1- Amazon Kinesis Data Firehose makes it easier to integrate log and metric management with third-party providers.

2- All integrations use an API KEY; consult the third-party provider's documentation to generate it.

3- These integrations let you use the log management and monitoring features of these third-party providers.

4- Data that could not be delivered to the third-party provider can be backed up to an Amazon S3 bucket in your account.

5- You can centralize any number of log sources in a single Amazon Kinesis data stream and use Amazon Kinesis Data Firehose to send them to Datadog. This use case is common because many companies use these third-party tools as the core of their monitoring and logging.

6- In this post, I mention Dynatrace, Datadog, and New Relic, but there are other third-party integrations to consider, such as LogicMonitor, MongoDB Cloud, Splunk, and Sumo Logic.

7- Many people might wonder why we don't simply install the Datadog Agent on the instance and skip Kinesis. In this scenario, Amazon Kinesis Data Streams and Amazon Kinesis Data Firehose let additional flows within AWS consume the same logs, for example a flow that delivers the logs to Amazon S3 so the information can be visualized with Amazon QuickSight.
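The S3 flow from point 7 can be sketched as a second delivery stream that reads from the same Kinesis data stream and writes to Amazon S3. Every consumer of a data stream receives all of its records, so both delivery streams get the same logs. Keep in mind that consumers without enhanced fan-out share each shard's read throughput. The helper name, ARNs, prefixes, and buffering values below are placeholders chosen for illustration, and the function only builds the `CreateDeliveryStream` parameters.

```python
def build_s3_delivery_stream(name: str, stream_arn: str,
                             role_arn: str, bucket_arn: str) -> dict:
    """Hypothetical helper: CreateDeliveryStream parameters for Kinesis to S3."""
    return {
        "DeliveryStreamName": name,
        "DeliveryStreamType": "KinesisStreamAsSource",
        "KinesisStreamSourceConfiguration": {
            "KinesisStreamARN": stream_arn,  # same stream that feeds Datadog
            "RoleARN": role_arn,
        },
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": role_arn,
            "BucketARN": bucket_arn,
            "Prefix": "logs/",
            "ErrorOutputPrefix": "logs-errors/",
            # Illustrative values: larger buffers mean fewer, bigger S3 objects.
            "BufferingHints": {"SizeInMBs": 64, "IntervalInSeconds": 300},
            "CompressionFormat": "GZIP",
        },
    }


# Placeholder values for illustration only.
stream_arn = "arn:aws:kinesis:us-east-1:123456789012:stream/onprem-logs"
s3_params = build_s3_delivery_stream(
    "logs-to-s3",
    stream_arn,
    "arn:aws:iam::123456789012:role/firehose-role",
    "arn:aws:s3:::my-log-archive",
)
```

Because the Datadog and S3 flows are separate delivery streams, either one can be changed or removed without touching the on-premises producer.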