Introduction

Many of us use Amazon Aurora every day for different projects. Without doubt, it is one of the best relational databases currently on the market. However, it is often a bit complex to understand the database’s pricing, and even more difficult is to identify cost-saving strategies.

In this post, I will show you how to identify and optimize the cost of an Aurora Database in which the cost doubled in 1 month as a result of I/O consumption.

The points that I will cover in this article are:

- Current workload

- Database cost increase

- Identify root cause

- Strategies to reduce I/O consumption cost

- Lessons learned

1. Current workload

This particular database works in production and supports the operation of hundreds of thousands of users in Latin America. The database has the following characteristics:

- Instance type: db.r5.xlarge

- Write queries per second: 510

- Read queries per second: 6

- Average connections to the DB: 100

- Type: Aurora Provisioned MySQL.

The workload receives a lot of queries from multiple sources, such as web servers.

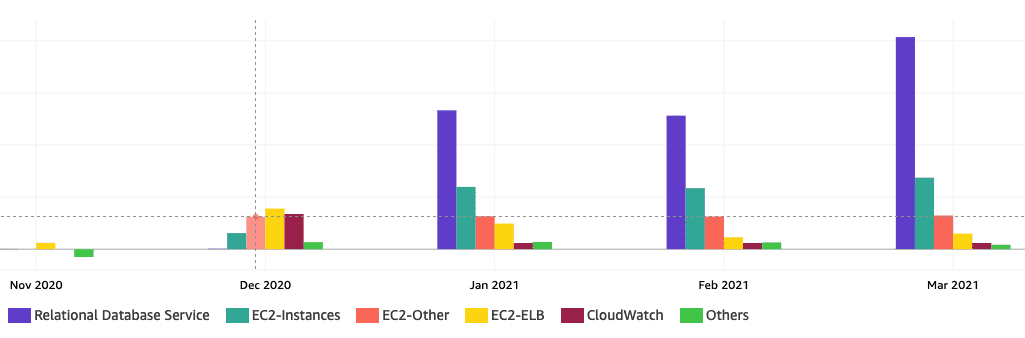

2. Database’s cost increase

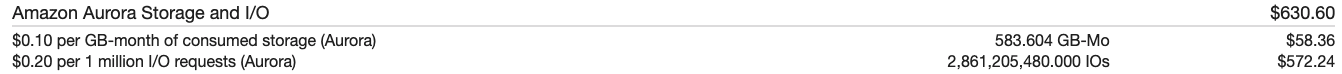

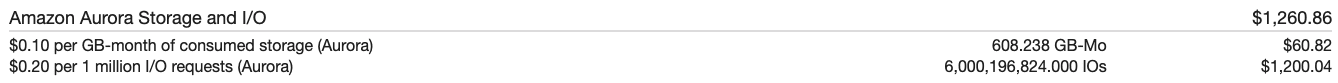

The database’s cost began to increase suddenly, rising to approximately 800 USD in a month. When I reviewed it in detail, I realized that the cost was due to I/O requests, which increased from 2,811,123,309 IOs to 6,000,196,824 IOs in one month.

AWS Cost Explorer report.

After reviewing the AWS Cost Explorer and identifying the RDS cost increase, I checked the monthly billing that reflected this increase.

February 2021

March 2021

3. Identify Root Cause

The most important thing when we have increased costs in our services is to be able to identify the root cause. In order to find the root, we must start asking ourselves questions about our workload:

- What recent changes have we made to the workload?

- Are there changes in our business KPIs, for example, more users on our application?

After doing this, we must rely on all the tools that AWS provides us to identify the cause. Some of those tools are:

CloudWatch Metrics

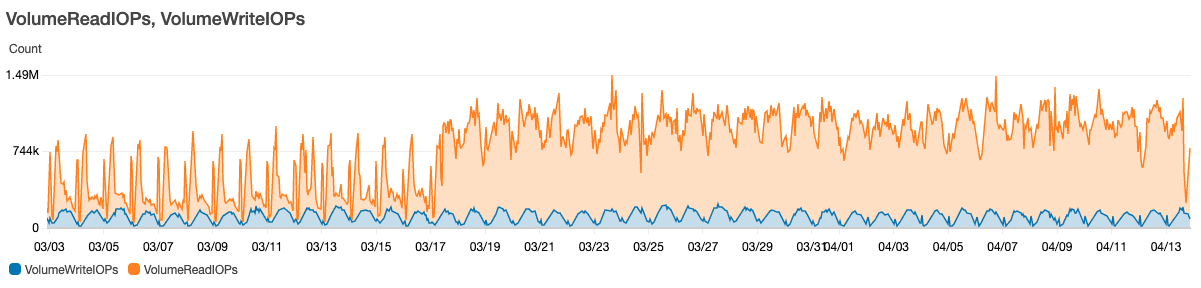

1- Check the CloudWatch metrics for VolumeReadIOPs and VolumeWriteIOPs. It will enable you to see the increase of the read operations.

Performance Insights

2- This tool does not currently support the version of Amazon Aurora that we are running. But it is a good option if you are having the same problem.

Audit logs + CloudWatch Insights

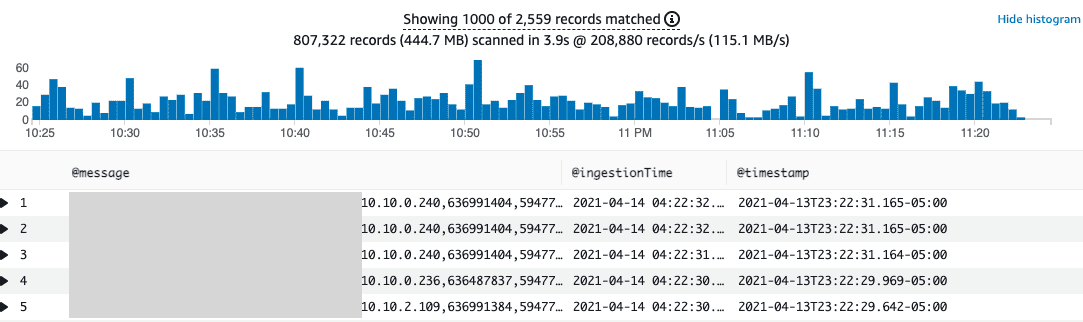

3- To identify the queries causing high consumption, we must enable the Audit logs in the database; once enabled, we must go to CloudWatch Insights and query the logs to find the queries that are being more recurrent in the database.

fields @message,@ingestionTime,@timestamp

| filter @message like /QUERY/

| limit 1000

As a result, this tool enables us to identify the queries running on the database and work to correct the problem.

4. Strategies to reduce I/O consumption’s cost

The best strategies for reducing the I/O consumption’s cost are:

1- Work closely with your database administrator and identify the queries that can be optimized.

2- Add a cache layer in front of the database like an ElastiCache Redis. But bear in mind the ElastiCache cluster’s cost and consider that you have to read the RDS to update the Cache database.

3- Bring this reporting layer to S3 and do the queries with Athena, connecting it to a visualization tool.

5. Lessons learned

There are four main takeaways I want to leave you with. They are:

1- When you need to do more detailed troubleshooting, temporarily enable the logs in the database; in this case, we use audit logs. Additionally, we could also enable slow queries, errors, and general logs. Note that leaving these logs permanently enabled will result in increased costs for CloudWatch Logs.

2- When we have high costs in our IO databases, understand how your workload is performing, investigate the consumption and correct it at the application level.

3- When the cost is due to reading operations, look for alternatives; for example, evaluate if you can use architecture with S3 + Athena for your reporting.

4- To control DB schema changes, use different tools, such as a Liquibase + Jenkins that allow controlling changes in DBs.