In performance testing, I have faced several projects in different stages: some mid-development and others at the end of their process, but hardly ever at the beginning. No matter the stage, the same questions always come up:

- When is the perfect time for testing?

- Should I complete the list of features and functionalities of my application before approaching the testing?

- Do I need to set up a replica of my production environment?

So, if you are also thinking about testing the performance of your application and these questions concern you, let’s answer them by reviewing the pros and cons of some approaches.

Without further ado, let’s start going through the questions.

When is the perfect time for performance testing?

This is like deciding when is the perfect time to become parents… and the thing is, there is no perfect time! If testing will save you time and effort, then just pick the most efficient moment available to you, and do it.

In my opinion, the sooner you can start, the better; however, some customers decide to perform this kind of testing at the end of the project before going live.

The usual approach: Testing as a final step of the project

In this approach, the customer gathers a set of test cases and calls on us to perform the test. For example:

- Load page.

- Create an account.

- Log in.

- Check the number of transactions per second an application can handle.

- Look at different items.

- Buy a product.

- Ask: is the architecture scalable?

- Change user profile data.

- Contact the support team.

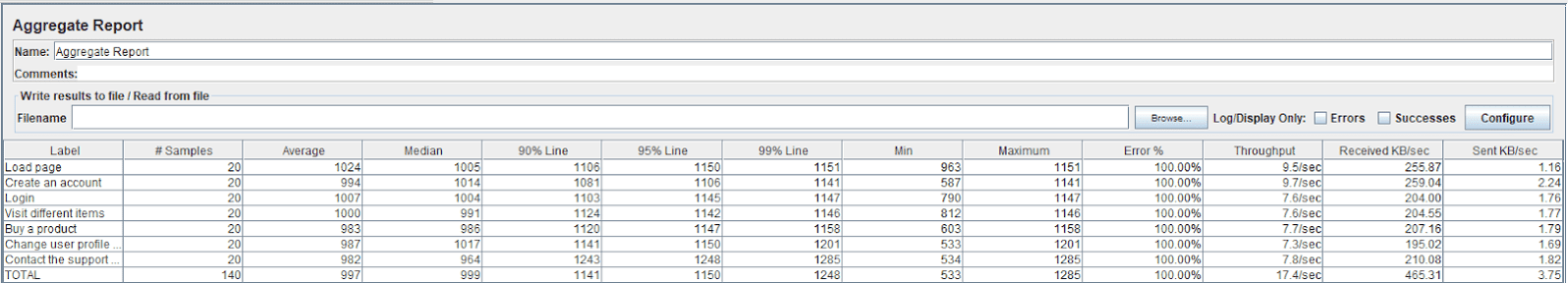

So, imagine we automate them using JMeter, and then we run them.

A disadvantage of this approach is…

The problem here is that if you run this many flows or scripts together, finding a problem becomes a tedious task, especially when the complete test fails.

So, if we discover a bottleneck in our system, it’s really hard to know which of our functionalities is causing it, and finding which transaction is degrading the infrastructure could be a lengthy process.

In these cases, you should start to run the tests separately until you find the bad one. But don’t you think it would be better to have run these tests when they were developed, independently, instead of running all these things together, one month before going live? Well, yes.

Here’s a tip!

Something that could help in these situations is to choose just the scripts that impact your architecture in different ways. If two or more scripts have the same impact on the way it works (i.e. they hit the same endpoints, transfer a similar amount of data, or run the same queries on the database), just choose one and give it the load equivalent of those two or more. Doing this will significantly reduce the number of scripts to execute and analyze.
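
As a rough illustration of that tip, here is a minimal Python sketch of the bookkeeping. The script names, endpoints, and user counts are invented for the example, and "same impact" is simplified to "hits the same set of endpoints"; in a real project you would also compare payload sizes and database queries.

```python
from collections import defaultdict

# Hypothetical script descriptions: name, endpoints hit, planned virtual users.
scripts = [
    {"name": "log_in", "endpoints": ("/auth",), "users": 50},
    {"name": "create_account", "endpoints": ("/auth",), "users": 20},
    {"name": "buy_product", "endpoints": ("/cart", "/payment"), "users": 30},
]


def consolidate(scripts):
    """Keep one script per impact signature and give it the summed load."""
    groups = defaultdict(list)
    for script in scripts:
        # Simplifying assumption: scripts hitting the same endpoints are equivalent.
        groups[frozenset(script["endpoints"])].append(script)

    plan = []
    for group in groups.values():
        representative = group[0]
        plan.append({
            "name": representative["name"],
            # The representative carries the load of every script it replaces.
            "users": sum(s["users"] for s in group),
            "replaces": [s["name"] for s in group[1:]],
        })
    return plan


plan = consolidate(scripts)
for entry in plan:
    print(entry)
```

With these made-up numbers, three scripts collapse into two, and the surviving login script runs with the combined load of both.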

As an advantage…

Even though it’s cumbersome, does that mean we cannot test at the last stage of the project? Hmm, no. We should still carry out our testing at the end, because we will still find issues, and our customers will very much appreciate that. As an advantage of this approach, you may even be able to use a production-like environment (pre-production, or staging as we call it), so the test will be realistic enough: same environment, same database, the perfect scenario for our testing. In fact, testing at the end of the project is the most common scenario.

On to the next question…

Should I complete the list of features and functionalities of my application before approaching the testing?

Of course not! I’m currently working on a project that uses a microservices architecture, and I started testing almost at the beginning of the project.

Working at the microservice level, you don’t need to go through the application to capture and simulate the flow; on the contrary, you work in a very modular way. You just need the specification of the calls.

So I took advantage of that and I started with my testing at a very early stage.

I have planned three levels of testing:

- Microservices level

- Services level

- User flow level

I conducted all this with the same tool just to ease the tasks of continuous integration.

Let’s improve the conventional testing approach!

Each level has a different goal. For example, at the microservices level you test the performance of the microservice itself: you are not necessarily looking for bottlenecks in the whole system, but measuring and trying to optimize each microservice. You can develop a script with the complete list of microservice calls in it.

How?

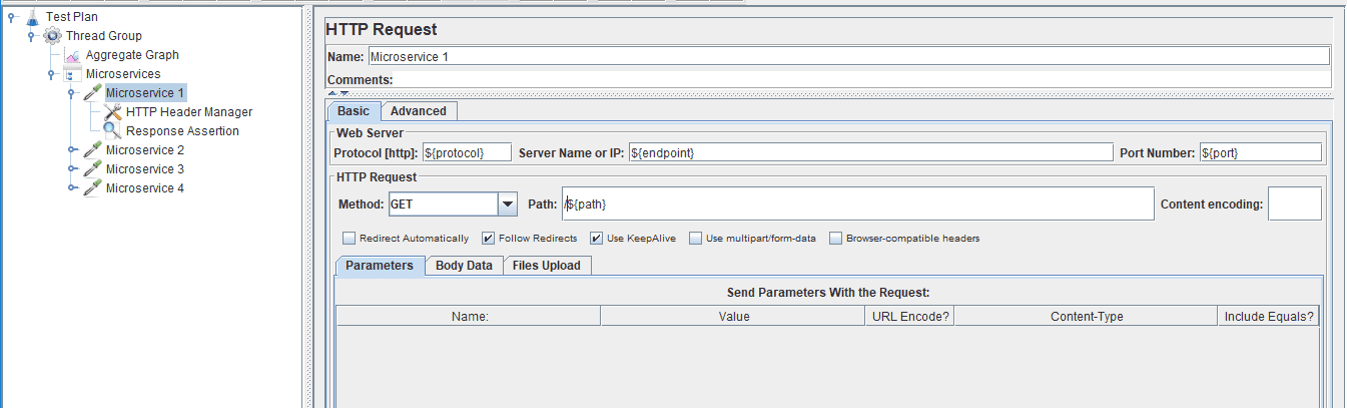

If you are asking how you could put this method into practice, it’s simple. As we have said before, we want to ease continuous integration tasks, so let’s use a single tool in which we can develop the scripts for all three testing levels I have listed. A tool that fits this requirement is JMeter.

Using JMeter we can easily build a microservice call…

…given that:

- ${VariableName} is the way JMeter allows you to invoke a variable.

- ${protocol} is the protocol that your microservices use.

- ${endpoint} is the endpoint specification.

- ${port} is the port of the application; you can leave this field empty if you don’t have a port to specify.

- ${path} is the path of the URL of the application.
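
To make the role of these variables concrete, here is a small Python sketch of how the four values combine into the URL that gets called. It only mirrors the idea; it is not how JMeter is implemented, and the host name and path are invented.

```python
def build_url(protocol, endpoint, port, path):
    """Compose the request URL from the four sampler variables."""
    # An empty port is simply omitted, matching the "leave it empty" case.
    host = f"{endpoint}:{port}" if port else endpoint
    # Ensure exactly one slash between the host and the path.
    return f"{protocol}://{host}/{path.lstrip('/')}"


# Hypothetical values, for illustration only.
url_with_port = build_url("http", "orders.internal.example", "8080", "/v1/orders")
url_without_port = build_url("https", "orders.internal.example", "", "v1/orders")

print(url_with_port)
print(url_without_port)
```

Keeping the values in variables means the same script can target a different environment by changing them in one place.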

If you have any questions about how to configure this sampler, please check the JMeter documentation.

In addition, as you can see in the above image, you can add a header manager if you need one, setting just the name and the value of each header. Last but not least, remember to add an assertion to ensure that the microservice is answering correctly and on time.
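
For readers who think more easily in code, the same three ingredients (a call, optional headers, and an assertion on correctness and response time) look roughly like this in plain Python. This is a sketch of the idea, not what JMeter does internally; the header name, URL, and thresholds in the comments are assumptions.

```python
import time
import urllib.error
import urllib.request


def call_microservice(url, headers=None, timeout=5.0):
    """Send a GET request and return (status_code, elapsed_seconds)."""
    request = urllib.request.Request(url, headers=headers or {})
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            response.read()  # include the body download in the measured time
            status = response.status
    except urllib.error.HTTPError as error:
        status = error.code  # 4xx/5xx answers still carry a status code
    return status, time.perf_counter() - start


def assert_response(status, elapsed, expected_status=200, max_seconds=0.5):
    """Fail if the service answered with the wrong status or too slowly."""
    assert status == expected_status, f"unexpected status {status}"
    assert elapsed <= max_seconds, f"too slow: {elapsed:.3f}s"


# Example usage against a hypothetical service (not executed here):
# status, elapsed = call_microservice(
#     "http://orders.internal.example:8080/v1/orders",
#     headers={"X-Api-Key": "my-key"},
# )
# assert_response(status, elapsed, expected_status=200, max_seconds=0.5)
```

Checking the response time as well as the status matters because a call that returns the right answer too slowly is still a performance failure.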

If you have already worked with Swagger or Postman, this will feel very similar. But this approach has the advantage of simulating load from many users or calls and measuring the results of the test on both the server and the client side.
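
The difference between a single call and a load test can be sketched in a few lines of Python. This is only a client-side illustration under simple assumptions: `fake_call` stands in for a real request, the user and call counts are arbitrary, and server-side metrics would have to come from your own monitoring.

```python
import math
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def run_load(call, users, calls_per_user):
    """Run `call` from `users` concurrent workers and collect latencies."""
    def one_user(_):
        latencies = []
        for _ in range(calls_per_user):
            start = time.perf_counter()
            call()
            latencies.append(time.perf_counter() - start)
        return latencies

    with ThreadPoolExecutor(max_workers=users) as pool:
        per_user = list(pool.map(one_user, range(users)))
    # Flatten the per-user lists into one list of samples.
    return [latency for user in per_user for latency in user]


def summarize(latencies):
    """Return a few client-side numbers: sample count, average, p95, max."""
    ordered = sorted(latencies)
    # Nearest-rank 95th percentile.
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "samples": len(ordered),
        "average": statistics.mean(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }


def fake_call():
    # Stand-in for a real request so the sketch runs without a server.
    time.sleep(0.01)


report = summarize(run_load(fake_call, users=5, calls_per_user=4))
print(report)
```

A dedicated tool such as JMeter gives you this kind of concurrency and reporting without having to write and maintain it yourself.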

Then, at the service level, you test how the integration of the microservices works. The goal here differs from the previous one, but the way to build the scripts is very similar.

And finally, working at user flow level is closer to the common way of testing, where the goal is to measure the performance of your application and the system as a whole. Regarding the scripting, these cases are not so simple. They will need a bit more logic to them, but nothing impossible. Fortunately, the JMeter community is big enough to support and help you!

Advantages/disadvantages of this “levels-based” testing approach

As you can see, we are including performance practices at different levels of the architecture. This, of course, is a great advantage, because when an issue appears in the highest layer, you will have more information about the lower layers, which will definitely help you find the problem more easily.

But, on the other hand, a disadvantage could be that you will need to invest part of your time in maintaining the scripts. Why am I saying this? Well, if you start testing from the beginning of the project, the functionalities will certainly change, so your scripts will probably stop working.

To round out this approach, it would be great to integrate these scripts with a CI tool such as Jenkins. Keep reading Part 2 of the article to find out how to integrate these approaches with a CI/CD framework and keep digging deeper into the questions (how? when? where?) of testing the application’s performance.