Introduction

In our previous article, we described what a conversational user interface (CUI) is, explaining how a CUI can be either text-based (a chatbot) or voice-based (a voice assistant). We also offered some initial ideas about how businesses can use CUIs to improve how they communicate and interact with their customers. For example, retailers can use CUIs to connect customers directly with what they are looking for. In this article, we’ll explore natural language understanding, the technology behind CUIs.

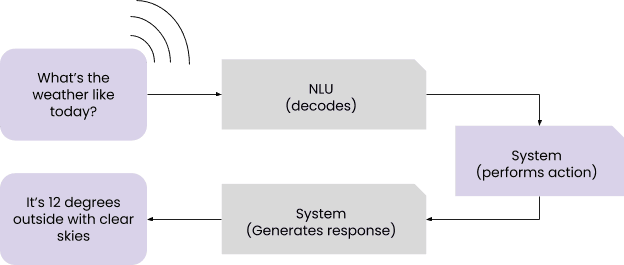

A CUI uses machine learning algorithms in the form of a natural language understanding (NLU) engine: a program that decodes human language, in the form of text, and transforms it into intentions the system can recognize and act on. These intentions are then interpreted by programs that perform actions on behalf of the user, either within the system itself or with an external one. In short, we can see the CUI as a type of front end that uses language as both its input and its output, with the NLU as the key piece that translates text.
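To make that flow concrete, here is a minimal sketch of a CUI front end: text goes in, an NLU turns it into an intent, and a business-defined handler acts on it. The `toy_nlu` function is a hypothetical keyword-matching stand-in for a trained NLU, and the intent names and replies are illustrative assumptions, not part of any real product.

```python
import re
from typing import Callable, Dict


def toy_nlu(text: str) -> str:
    """Hypothetical stand-in for a trained NLU: maps raw text to an intent name."""
    tokens = set(re.findall(r"[a-z']+", text.lower()))
    if "order" in tokens and tokens & {"where", "status"}:
        return "check_order_status"
    if tokens & {"hello", "hi", "hey"}:
        return "greet"
    return "fallback"


# Business-defined handlers that act on behalf of the user for each intent.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "greet": lambda text: "Hello! How can I help you today?",
    "check_order_status": lambda text: "Let me look up your order.",
    "fallback": lambda text: "Sorry, I didn't understand that.",
}


def handle_message(text: str) -> str:
    """Front end: the NLU turns text into an intent, then a handler produces the reply."""
    intent = toy_nlu(text)
    return HANDLERS[intent](text)


print(handle_message("Where is my order?"))  # Let me look up your order.
```

In a real CUI, the handler for an intent like `check_order_status` would typically call an internal or external system (an order database, for instance) rather than return a fixed string.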

How it works

As we established, natural language understanding is the heart of the conversational interface. It’s a machine learning tool whose primary purpose is to convert natural language into objects that our systems can handle.

But NLUs are not magic: they can’t understand what a user is saying on their own. Like any machine learning algorithm, they need developers to provide them with sets of training examples. After training, the NLU can identify words or phrases and map them to a particular user intention, represented as an object the system knows how to handle. These objects often come in pairs of intents and entities.

A world of Intents and Entities

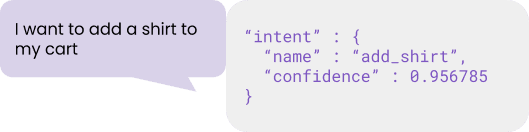

At this point we have moved away from the richness of human language and are dealing with computer language again (or at least a human-readable version of it). Broadly, we can define an intent as the system’s representation of an action the user wants to perform. Intents are always defined by the business, and each has at least two parameters: 1) the name of the intent; and 2) the confidence, which is the model’s estimated probability that the intent was recognized correctly.

A common intent would look like this:

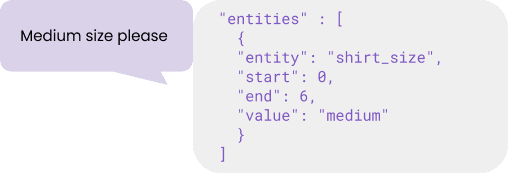
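In code, an intent like the one above might be modeled as a small object holding its name and confidence. This is a minimal sketch: the `Intent` class, the `resolve` helper, and the 0.7 threshold are hypothetical choices for illustration, and real NLUs use their own field names and formats. The helper shows one common use of the confidence value: falling back to a generic response when the NLU is not sure enough.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intent:
    """Hypothetical representation of a recognized intent."""

    name: str          # business-defined intent name, e.g. "check_order_status"
    confidence: float  # model's estimated probability that this intent is correct

    def __post_init__(self) -> None:
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


def resolve(intent: Intent, threshold: float = 0.7) -> str:
    """Return the intent name, or "fallback" when confidence is below the threshold."""
    return intent.name if intent.confidence >= threshold else "fallback"


print(resolve(Intent("check_order_status", 0.92)))  # check_order_status
print(resolve(Intent("check_order_status", 0.41)))  # fallback
```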

Entities, on the other hand, represent parameters that are valuable to the business, such as a product name or an order number, and they are also encoded into objects.
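As an illustration, an entity might be represented as an object holding its type, the matched value, and where it appears in the text. This is a minimal sketch under stated assumptions: the `Entity` class and its fields are hypothetical, and the regular expression (which assumes order numbers are six digits) stands in for extraction that a trained NLU would typically learn from annotated examples.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Entity:
    """Hypothetical representation of an extracted entity."""

    entity: str  # entity type, e.g. "order_number"
    value: str   # the text that was matched
    start: int   # character offset where the match begins
    end: int     # character offset just past the match


# Assumption for illustration only: order numbers are exactly six digits.
ORDER_NUMBER = re.compile(r"\b\d{6}\b")


def extract_order_numbers(text: str) -> List[Entity]:
    """Return every six-digit order number found in the text as an Entity."""
    return [
        Entity("order_number", match.group(), match.start(), match.end())
        for match in ORDER_NUMBER.finditer(text)
    ]


print(extract_order_numbers("Where is order 123456?"))
```

Paired with an intent such as `check_order_status`, an entity like this gives the handling program both what the user wants and which order they mean.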

How do you train natural language understanding?

As we mentioned earlier, for an NLU to understand our messages we need to provide it with training phrases. Most NLUs work the same way: you define an intent name and add its training phrases.

Training phrases should be as varied as possible so that the system can recognize the intent across the many different ways users might express it.
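To show what "define an intent name and add its training phrases" can look like under the hood, here is a minimal sketch of a toy intent classifier. It uses multinomial Naive Bayes over bag-of-words features, a technique chosen only for illustration; it is not how Dialogflow or Rasa work internally. The intent names, phrases, and the `NaiveBayesIntentClassifier` class are hypothetical. Because the classifier learns which words appear with each intent, more varied phrasings give it more vocabulary to recognize.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


class NaiveBayesIntentClassifier:
    """Toy multinomial Naive Bayes intent classifier (illustrative only)."""

    def fit(self, training_data: Dict[str, List[str]]) -> "NaiveBayesIntentClassifier":
        self.word_counts: Dict[str, Counter] = {}
        self.priors: Dict[str, float] = {}
        self.vocab: Set[str] = set()
        total_examples = sum(len(phrases) for phrases in training_data.values())
        for intent, phrases in training_data.items():
            counts: Counter = Counter()
            for phrase in phrases:
                counts.update(tokenize(phrase))
            self.word_counts[intent] = counts
            self.priors[intent] = len(phrases) / total_examples
            self.vocab.update(counts)
        return self

    def predict(self, text: str) -> Tuple[str, float]:
        """Return the most likely intent and its normalized probability."""
        tokens = tokenize(text)
        log_scores: Dict[str, float] = {}
        for intent, counts in self.word_counts.items():
            total = sum(counts.values())
            score = math.log(self.priors[intent])
            for tok in tokens:
                # Add-one (Laplace) smoothing so unseen words don't zero out the score.
                score += math.log((counts[tok] + 1) / (total + len(self.vocab)))
            log_scores[intent] = score
        # Normalize the log scores into probabilities that sum to 1.
        best = max(log_scores.values())
        exp_scores = {i: math.exp(s - best) for i, s in log_scores.items()}
        norm = sum(exp_scores.values())
        intent = max(exp_scores, key=exp_scores.get)
        return intent, exp_scores[intent] / norm


TRAINING_DATA = {
    "check_order_status": [
        "where is my order",
        "has my package shipped yet",
        "track my delivery",
        "when will my parcel arrive",
    ],
    "greet": ["hello", "hi there", "good morning", "hey"],
}

clf = NaiveBayesIntentClassifier().fit(TRAINING_DATA)
print(clf.predict("when does my package arrive"))
```

The returned probability plays the role of the intent’s confidence described earlier.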

If you are using a tool like Dialogflow, its graphical interface walks you through the process and can even run the training with the click of a button. Other tools, such as the open source framework Rasa, take a more developer-oriented approach: you fill in several files with the required examples and then run a command to train the model.

The result: A trained model

After the training routine is done, you will be left with a trained model. What is a model?

Put simply, the model is the output of training. Once it is loaded into the NLU, it contains everything the program needs to process text input and perform the actual language understanding.
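The separation between training and using a model can be sketched as follows: training produces an artifact that is saved to disk, and the NLU later loads that artifact to process text. In this minimal sketch the "model" is just per-intent word counts stored as JSON; the function names, file name, and scoring rule are hypothetical, and real tools use their own, far richer model formats.

```python
import json
import re
import tempfile
from collections import Counter
from pathlib import Path
from typing import Dict, List

Model = Dict[str, Dict[str, int]]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9']+", text.lower())


def train(training_data: Dict[str, List[str]]) -> Model:
    """Toy training step: the learned model is just per-intent word counts."""
    return {
        intent: dict(Counter(tok for phrase in phrases for tok in tokenize(phrase)))
        for intent, phrases in training_data.items()
    }


def save_model(model: Model, path: Path) -> None:
    path.write_text(json.dumps(model), encoding="utf-8")


def load_model(path: Path) -> Model:
    return json.loads(path.read_text(encoding="utf-8"))


def predict(model: Model, text: str) -> str:
    """Pick the intent whose learned words overlap most with the input."""
    tokens = tokenize(text)
    scores = {
        intent: sum(counts.get(tok, 0) for tok in tokens)
        for intent, counts in model.items()
    }
    return max(scores, key=scores.get)


training_data = {
    "check_order_status": ["where is my order", "track my delivery"],
    "greet": ["hello", "hi there"],
}
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.json"
    save_model(train(training_data), model_path)  # training happens once
    model = load_model(model_path)                # later: load the saved artifact
    print(predict(model, "can you track my order"))  # check_order_status
```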

Keep in mind that most NLUs are not multilingual. This means you need a separate model (and a separate set of training examples) for each language you intend to support.
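One way to handle several languages is to keep one model per language and select it by language code before parsing. In this minimal sketch the per-language "models" are hypothetical keyword functions standing in for separately trained models, and the `MODELS` registry and `parse` function are illustrative names, not a real API.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for models trained separately on each language's examples.
def english_model(text: str) -> str:
    return "greet" if "hello" in text.lower() else "fallback"


def spanish_model(text: str) -> str:
    return "greet" if "hola" in text.lower() else "fallback"


# One model per supported language, keyed by language code.
MODELS: Dict[str, Callable[[str], str]] = {"en": english_model, "es": spanish_model}


def parse(text: str, language: str) -> str:
    """Route the text to the model trained for its language."""
    try:
        model = MODELS[language]
    except KeyError:
        raise ValueError(f"no model trained for language {language!r}") from None
    return model(text)


print(parse("¡Hola!", "es"))  # greet
```

Supporting a new language then means training and registering another model, which is why each language needs its own training examples.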

Where do we go now?

By now you should have a better understanding of how a system understands a voice or text command and how the process of creating that interaction works. In the last article of this introductory series, we will cover some of the challenges of creating human-like experiences so you can start building your own conversational interface.